AI Detection by Modality: Images, Audio, Video and Text

How AI detection protects against misinformation and fraud from generative AI content across text, images, audio, and video.

Generative AI has given people around the world the ability to create content with a few keystrokes. It has never been easier to produce text, images, audio, or video.

As this technology has evolved, it has expanded across modalities, each bringing its own possibilities and challenges. AI content creation began with text, generating articles, stories, and even code with surprising ease and coherence. Images came next, with models producing artwork and visual content that rivals human-made work. Audio generation followed, giving rise to synthetic voices and music that are increasingly difficult to distinguish from human recordings. Most recently, AI-generated video has opened new frontiers, from realistic animations to complex video sequences, all achievable with a fraction of the traditional resources.

However, the rapid rise of AI-generated content has also introduced new risks, including misinformation, fraud, and intellectual property infringement.

Checking for AI in Text

In any given semester of high school, I'd find myself toying with text size and paragraph margins to reach the minimum page length for my English class. I understand why students around the world are at least tempted to use these tools to write their reports.

While AI-generated text can undoubtedly ease the burden of writing tasks, it raises important questions about the value and purpose of human-written content, particularly in educational settings. As AI becomes more advanced, it's crucial to assess the potential implications of relying on machine-generated text for assignments and reports.

Beyond academia, distinguishing human-written text from synthetic AI content often has financial consequences.

How about our favorite Nigerian prince who periodically shows up in our inbox asking for a favor? His once broken-English emails are now personalized and written by AI. There's probably a GPT for that now. Or the countless "work from home for $1k per month" posts flooding social media comment sections? Even product review sections are inundated with AI-generated content. How would we know what's AI and what isn't?

This is where text AI detection systems come into play: they separate original, human-written content from AI-generated content. Because human writing still plays a large role in society, AI detection has become an essential tool for preserving the authenticity and integrity of the written word.

The question remains whether large language models (LLMs) will become so good at writing that AI-written text can no longer be detected, as some researchers predict. Will AI checkers still be able to detect AI-generated text in the near future?

Picture Perfect AI Detection

Over 1.8 trillion photos are taken per year. Wow. With camera phones, this number continues to grow every year. As we scroll through endless feeds of people documenting their lives in pictures, GenAI has made taking out a smartphone, focusing, and snapping look like hard work. With generative algorithms churning out photorealistic images, illustrations, and art in seconds, distinguishing artificial creations from authentic snapshots is becoming both more important and more difficult.

Face it, the days of six-fingered hands and garbled text in AI-generated images are quickly fading. To reliably tell the difference, it takes AI to detect AI: more specifically, an AI detector trained to pick up on details humans cannot see.

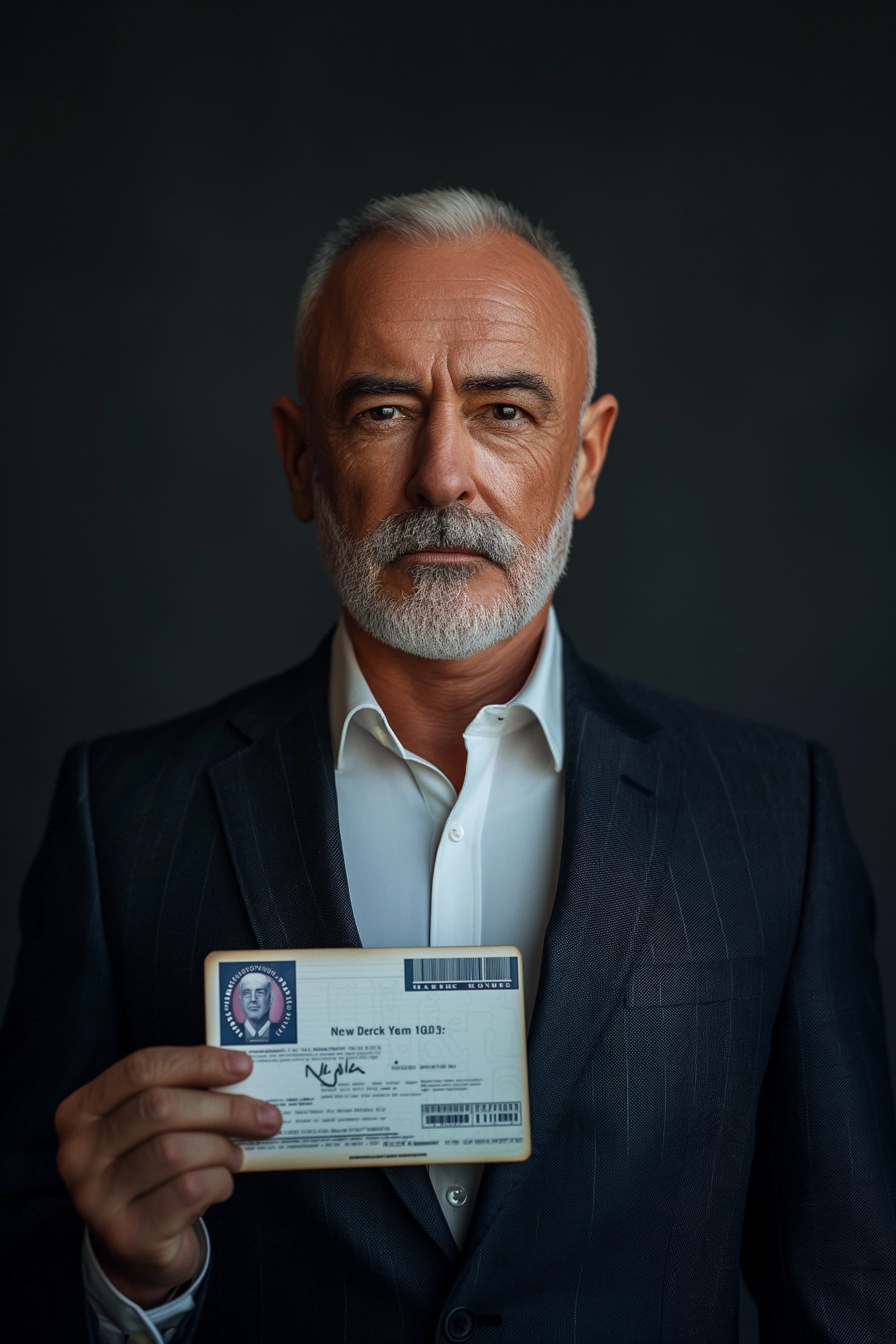

But what's the big deal about using AI to create images if nothing checks them? Without an AI detector, the potential for abuse multiplies.

Bad actors could manufacture fake identity documents, such as passports, to bypass KYC checks. E-commerce competitors could generate brand-damaging product reviews. Worse still, social media could be flooded with NSFW content and political misinformation depicting events that never happened.

Just as virus scanners guard networks against malware, AI detection protects the informational ecosystem against visual misinformation.

Dialing in on Audio AI

If your mother called you and asked you for money quickly because she's in a pinch, would you question it? Probably not.

The sound of a familiar voice on the other end of a phone call is often all it takes to convey trust and authenticity. But what if that trust could be effortlessly mimicked and manipulated? With AI-generated audio, a 30-second sample of someone's voice can be enough to recreate it saying anything, such as an AI version of the US president telling an entire state not to vote.

Ensuring public confidence in the electoral process is vital. AI-generated recordings used to deceive voters could have devastating effects on democratic elections.

Checking audio requires a tool that can determine whether a recording is AI-generated. Just as AI can be used to generate these audio deepfakes, it also holds the key to identifying them. AI detection for audio relies on algorithms trained to analyze voice samples, detecting the subtle nuances and discrepancies that differentiate genuine recordings from AI-generated ones.

By distinguishing bona fide vocal recordings from AI-generated impersonations, AI audio detection offers a safeguard against coordinated disinformation campaigns, predatory scams, and voice data theft, all of which erode security and trust worldwide.

Directing AI Checkers

AI video has given everyone the ability to create studio-quality productions without a major studio. Looking to create a video for your brand or company? An AI video generator can do it. With intelligent editing algorithms, lifelike avatars, and context-aware scene rendering, this technology promises to revolutionize workflows for creators and marketers alike.

But much like the rise of deepfake imagery, the same techniques fueling innovation also open new fronts for manipulation and fraud.

Forged corporate announcements or political smear campaigns could erode public trust in institutions. Synthetic videos impersonating executives could trick finance teams into fraudulent transfers, in at least one reported case to the tune of $25 million.

AI Detection as the Final Defense

As AI capabilities advance at a dizzying pace across text, images, audio, and video, our responsibility grows to thoughtfully direct and safeguard this technology for the benefit of society. From smart assistants that converse naturally to algorithms that generate lifelike images, produce studio-quality videos, and mimic human voices, AI has blurred the lines of reality.

Language models may expand access to information, but they also raise the risk of misinformation. Generative art tools boost creativity, yet accountability for training datasets and model fairness is still lacking. Synthetic voice and video unlock new creative frontiers, yet bring unintended hazards in the form of deepfakes and fraud.

AI detection is the countermeasure in the escalating arms race between creators of AI-generated fakes and those tasked with safeguarding the digital ecosystem. Using advanced algorithms and deep learning, these tools scrutinize the minute details that differentiate genuine content from AI-generated content.

For sectors ranging from finance, where AI-generated audio can facilitate fraudulent transactions, to politics, where synthetic videos can manipulate public opinion, the stakes could not be higher. The implications of unchecked AI-generated content extend beyond individual incidents of fraud or deception. By investing in AI detection technologies and fostering an environment of transparency and trust, we can embrace the full potential of AI while safeguarding the integrity of our digital world.